An Overview of Sampling

Chapter 10 of the textbook, “Introduction to Statistics in Psychology,” focuses on the key concepts of samples and populations and their role in inferential statistics, which allows researchers to generalize findings from a smaller subset of data to the entire population of interest.

- Population: The entire set of scores on a particular variable. It’s important to note that in statistics, the term “population” refers specifically to scores, not individuals or entities.

- Sample: A smaller set of scores selected from the entire population. Samples are used in research due to the practical constraints of studying entire populations, which can be time-consuming and costly.

Random Samples and Their Characteristics

The chapter emphasizes the importance of random samples, in which every score in the population has an equal chance of being selected. Random selection does not guarantee that any single sample mirrors the population. It does remove systematic bias from the selection process, so samples are representative on average and generalizations drawn from them are more trustworthy.

Various methods can be used to draw random samples, including random number generators, tables of random numbers, or even drawing slips of paper from a hat. The key is that every score has an equal chance of being included.
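The "slips of paper from a hat" method above can be mimicked with a seeded random number generator. This is a minimal sketch that assumes sampling without replacement (a slip is not put back once drawn). The helper name `draw_random_sample` and the 20-score population are hypothetical and used only for illustration.

```python
import random

def draw_random_sample(population, n, seed=None):
    """Draw a simple random sample of n scores without replacement.

    Every position in `population` has the same chance of being chosen,
    which is the defining property of a random sample.
    """
    rng = random.Random(seed)  # seeding makes the draw reproducible
    return rng.sample(population, n)

# Hypothetical population of 20 scores (illustrative only).
population = [12, 15, 9, 20, 17, 11, 14, 18, 10, 16,
              13, 19, 8, 21, 15, 12, 17, 14, 11, 16]

sample = draw_random_sample(population, 5, seed=42)
print(sample)
```

Fixing the seed is only a convenience for reproducing a draw; omitting it gives a fresh random sample on each call.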

The chapter then examines the characteristics of random samples, in particular the tendency of sample means to approximate the population mean, and to do so more closely as sample size increases. Tables 10.2 and 10.3 in the source illustrate this: as the sample size increases, the sample means spread out less and cluster more tightly around the population mean.
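The pattern described above can be reproduced with a small simulation. This sketch does not reproduce the data in Tables 10.2 and 10.3; it assumes a hypothetical population of 10,000 normally distributed scores (mean 100, SD 15) and draws 1,000 random samples at each of three sample sizes. The helper `sample_means` is an illustrative name, not something from the textbook.

```python
import random
import statistics

def sample_means(population, n, n_samples, rng):
    """Return the means of n_samples random samples, each of size n."""
    return [statistics.mean(rng.sample(population, n)) for _ in range(n_samples)]

rng = random.Random(1)
# Hypothetical population: 10,000 scores, roughly normal with mean 100 and SD 15.
population = [rng.gauss(100, 15) for _ in range(10_000)]
pop_mean = statistics.mean(population)

spread = {}
for n in (5, 20, 80):
    means = sample_means(population, n, n_samples=1_000, rng=rng)
    spread[n] = statistics.stdev(means)  # how widely the sample means scatter
    print(f"n={n:>2}: mean of sample means = {statistics.mean(means):6.2f}, "
          f"SD of sample means = {spread[n]:5.2f}")
print(f"population mean = {pop_mean:.2f}")
```

In each row the average of the sample means sits close to the population mean, while the SD of the sample means shrinks as n grows.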

Standard Error and Confidence Intervals

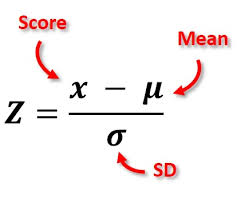

The chapter introduces the standard error, a measure of how much sample means vary when repeated samples are drawn from the same population. The standard error of the mean is the standard deviation of this set of sample means, so it reflects how far a typical sample mean lies from the population mean.

- The standard error is inversely proportional to the square root of the sample size. Larger samples therefore have smaller standard errors, indicating more precise estimates of the population mean, although quadrupling the sample size only halves the standard error.
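The square-root relationship can be checked with the usual formula for the standard error of the mean, SE = SD / √N. This sketch assumes the SD is either known or estimated from the sample; the function name `standard_error` is a hypothetical helper.

```python
import math

def standard_error(sd, n):
    """Standard error of the mean: the standard deviation divided by sqrt(N)."""
    return sd / math.sqrt(n)

print(standard_error(10, 25))   # 2.0
print(standard_error(10, 100))  # 1.0
```

Going from 25 to 100 scores (four times as many) halves the standard error from 2.0 to 1.0. It does not reduce it to a quarter.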

The chapter also explains confidence intervals. A confidence interval is a range, calculated from the sample data, within which the true population value is likely to lie. The most commonly used confidence level is 95%. Strictly, this means that if the sampling and calculation were repeated many times, about 95% of the intervals obtained would contain the true population value; any single interval either contains it or does not.
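The "repeated many times" reading of 95% can be demonstrated by simulation. This is a sketch under stated assumptions: it uses the large-sample normal approximation (mean ± 1.96 × SE), whereas small samples are usually handled with the t distribution, which the textbook may use instead. The population values (mean 100, SD 15), the sample size of 50, and the helper `confidence_interval_95` are hypothetical.

```python
import random
import statistics

Z_95 = statistics.NormalDist().inv_cdf(0.975)  # about 1.96

def confidence_interval_95(sample):
    """Approximate 95% CI for the mean: mean +/- 1.96 * SE (normal approximation)."""
    n = len(sample)
    mean = statistics.mean(sample)
    se = statistics.stdev(sample) / n ** 0.5  # SE estimated from the sample SD
    return mean - Z_95 * se, mean + Z_95 * se

rng = random.Random(7)
TRUE_MEAN, TRUE_SD, N, REPS = 100, 15, 50, 2_000

hits = 0
for _ in range(REPS):
    sample = [rng.gauss(TRUE_MEAN, TRUE_SD) for _ in range(N)]
    low, high = confidence_interval_95(sample)
    hits += low <= TRUE_MEAN <= high  # did this interval capture the true mean?

coverage = hits / REPS
print(f"{coverage:.1%} of intervals contained the population mean")
```

The printed coverage lands close to 95% (slightly below with N = 50, because 1.96 ignores the extra uncertainty that the t distribution accounts for). Each individual interval either captured the true mean or missed it.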

- Confidence intervals provide a way to quantify the uncertainty associated with inferring population characteristics from sample data. A wider confidence interval indicates greater uncertainty, while a narrower interval suggests a more precise estimate.

Key Points from Chapter 10

- Understanding the distinction between samples and populations is crucial for applying inferential statistics.

- Random samples are essential for drawing valid generalizations from research findings.

- Standard error and confidence intervals provide measures of the variability and uncertainty associated with sample-based estimates of population parameters.

The chapter concludes by reminding readers that these concepts form the foundation for understanding and applying inferential statistics in later chapters, paving the way for statistical tests such as t-tests.