Chi-square is a statistical test widely used in media research to analyze relationships between categorical variables. This essay explains the concept and its formula, works through an example, and discusses significance and significance levels.

Understanding Chi-Square

Chi-square (χ²) is a non-parametric test that examines whether there is a significant association between two categorical variables. It compares observed frequencies with the frequencies expected if the two variables were independent, to assess whether the differences are larger than chance alone would plausibly produce.

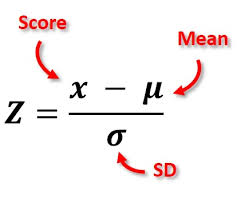

The Chi-Square Formula

The formula for calculating the chi-square statistic is:

$$ \chi^2 = \sum \frac{(O - E)^2}{E} $$

Where:

- χ² is the chi-square statistic

- O is the observed frequency

- E is the expected frequency

- Σ represents the sum over all cells (every combination of categories)
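
As a minimal sketch, assuming the observed and expected frequencies are already paired cell by cell in two equal-length lists, the formula translates directly into Python. The function name `chi_square_statistic` is hypothetical, and the sample numbers are the six cells of the gender and platform example worked through below.

```python
def chi_square_statistic(observed, expected):
    """Return the sum of (O - E)^2 / E over paired cells.

    Assumes both sequences list the cells in the same order and that
    every expected frequency is positive.
    """
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    total = 0.0
    for o, e in zip(observed, expected):
        if e <= 0:
            raise ValueError("expected frequencies must be positive")
        total += (o - e) ** 2 / e
    return total


# Cells from the gender/platform example, read row by row.
observed = [40, 60, 30, 20, 30, 70]
expected = [40, 60, 20, 30, 40, 60]
print(round(chi_square_statistic(observed, expected), 2))  # 12.5
```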

Example in Media Research

Let’s consider a study examining the relationship between gender and preferred social media platform among college students.

Observed frequencies:

| Platform | Male | Female |
|---|---|---|
| Instagram | 40 | 60 |
| Twitter | 30 | 20 |
| TikTok | 30 | 70 |

To calculate χ², we first determine the expected frequency for each cell and then apply the formula above.

Expected Frequencies

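Total respondents (grand total): 250

Row totals: Instagram 100, Twitter 50, TikTok 100

Column totals: Males 100, Females 150

Each expected frequency is the product of its row total and column total, divided by the grand total:

$$ E = \frac{\text{row total} \times \text{column total}}{\text{grand total}} $$

For example, for Instagram and Male: E = (100 × 100) / 250 = 40.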

| Platform | Male | Female |
|---|---|---|
| Instagram | 40 | 60 |
| Twitter | 20 | 30 |
| TikTok | 40 | 60 |
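
Each expected frequency in this table is (row total × column total) / grand total; for instance, Twitter/Male is 50 × 100 / 250 = 20. The sketch below applies that rule to an observed table stored as a list of rows (platforms) by columns (male, female). The helper name `expected_frequencies` is hypothetical.

```python
def expected_frequencies(observed):
    """Expected counts under independence: row total * column total / grand total."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)
    return [[r * c / grand_total for c in col_totals] for r in row_totals]


# Rows: Instagram, Twitter, TikTok; columns: Male, Female.
observed = [[40, 60], [30, 20], [30, 70]]
for platform, row in zip(["Instagram", "Twitter", "TikTok"], expected_frequencies(observed)):
    print(platform, row)
# Instagram [40.0, 60.0]
# Twitter [20.0, 30.0]
# TikTok [40.0, 60.0]
```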

Chi-Square Calculation

$$ \chi^2 = \frac{(40 - 40)^2}{40} + \frac{(60 - 60)^2}{60} + \frac{(30 - 20)^2}{20} + \frac{(20 - 30)^2}{30} + \frac{(30 - 40)^2}{40} + \frac{(70 - 60)^2}{60} $$

$$ \chi^2 \approx 0 + 0 + 5 + 3.33 + 2.5 + 1.67 $$

$$ \chi^2 = 12.5 $$

Degrees of Freedom

$$ df = (\text{number of rows} - 1) \times (\text{number of columns} - 1) = (3 - 1) \times (2 - 1) = 2 $$
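
As a check on the hand arithmetic, the statistic and degrees of freedom can be reproduced from the observed table alone in standard-library Python. This is a sketch: the helper name `chi_square_test_of_independence` is hypothetical, and no continuity correction is applied.

```python
def chi_square_test_of_independence(observed):
    """Return (chi-square statistic, degrees of freedom) for an r x c table."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(observed):
        for j, o in enumerate(row):
            e = row_totals[i] * col_totals[j] / grand_total  # expected count
            statistic += (o - e) ** 2 / e
    df = (len(observed) - 1) * (len(col_totals) - 1)  # (rows - 1) * (columns - 1)
    return statistic, df


observed = [[40, 60], [30, 20], [30, 70]]  # Instagram, Twitter, TikTok x Male, Female
stat, df = chi_square_test_of_independence(observed)
print(round(stat, 2), df)  # 12.5 2
```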

Significance

For df = 2 and α = 0.05, the critical value is 5.991[1].

Since our calculated χ² (12.5) is greater than the critical value (5.991), we reject the null hypothesis.
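
Comparing against a table value is one route; another is to compute the p-value directly. For the special case df = 2, the chi-square upper-tail probability has the closed form P(χ² > x) = e^(−x/2), so no statistics library is needed. The sketch below relies on that closed form and therefore handles only df = 2; the function name `chi2_sf_df2` is hypothetical.

```python
import math


def chi2_sf_df2(x):
    """Upper-tail probability P(X > x) for a chi-square variable with 2 df.

    Valid only for df = 2, where the survival function is exp(-x / 2).
    """
    if x < 0:
        return 1.0
    return math.exp(-x / 2)


alpha = 0.05
statistic = 12.5
p_value = chi2_sf_df2(statistic)
print(f"p = {p_value:.4f}")  # p = 0.0019
print("reject H0" if p_value < alpha else "fail to reject H0")  # reject H0

# Sanity check: the df = 2 critical value at alpha is -2 * ln(alpha), about 5.991.
print(round(-2 * math.log(alpha), 3))  # 5.991
```

A p-value below α leads to the same decision as a statistic above the critical value; the two checks are equivalent.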

The result is statistically significant at the 0.05 level. This indicates that there is a significant relationship between gender and preferred social media platform among college students in this sample.

Significance and Significance Level

The calculated χ² value is compared to a critical value from the chi-square distribution table. This comparison helps determine if the relationship between variables is statistically significant.

The significance level (α) is typically set at 0.05, meaning there’s a 5% chance of rejecting the null hypothesis when it’s actually true. If the calculated χ² exceeds the critical value at the chosen significance level, we reject the null hypothesis and conclude there’s a significant relationship between the variables[1][2].
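
The meaning of α can be illustrated by simulation: if many samples are drawn from a population in which gender and platform really are independent, and each is tested at α = 0.05, roughly 5% should still be declared significant. The sketch below assumes hypothetical population proportions chosen to match the example's margins (40% Instagram, 20% Twitter, 40% TikTok; 40% male), 250 respondents per sample, and the df = 2 critical value of 5.991. The seed and number of trials are arbitrary.

```python
import random


def chi_square_statistic(table):
    """Pearson chi-square statistic for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, o in enumerate(row):
            e = row_totals[i] * col_totals[j] / n
            if e > 0:  # skip empty margins (vanishingly rare at n = 250)
                stat += (o - e) ** 2 / e
    return stat


def simulate_type_i_error(trials=2000, n=250, critical=5.991, seed=1):
    """Fraction of null-true samples whose statistic exceeds the critical value."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        table = [[0, 0], [0, 0], [0, 0]]  # rows: Instagram, Twitter, TikTok
        for _ in range(n):
            # Platform and gender are drawn independently, so H0 is true.
            u = rng.random()
            platform = 0 if u < 0.4 else (1 if u < 0.6 else 2)
            gender = 0 if rng.random() < 0.4 else 1  # 0 = male, 1 = female
            table[platform][gender] += 1
        if chi_square_statistic(table) > critical:
            rejections += 1
    return rejections / trials


print(simulate_type_i_error())  # expect a value near 0.05
```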

Interpreting Results

A significant result suggests that the differences between observed and expected frequencies are unlikely to be due to chance alone, providing evidence of an association between gender and social media platform preference in our example. On its own, the test does not show how strong that association is or that one variable causes the other. This information can be valuable for media strategists in targeting specific demographics[3][4].

In conclusion, chi-square is a powerful tool for media researchers to analyze categorical data, providing insights into relationships between variables that can inform decision-making in various media contexts.

Citations:

[1] https://datatab.net/tutorial/chi-square-distribution

[2] https://www.statisticssolutions.com/free-resources/directory-of-statistical-analyses/chi-square/

[3] https://www.scribbr.com/statistics/chi-square-test-of-independence/

[4] https://www.investopedia.com/terms/c/chi-square-statistic.asp

[5] https://en.wikipedia.org/wiki/Chi_squared_test

[6] https://statisticsbyjim.com/hypothesis-testing/chi-square-test-independence-example/

[7] https://passel2.unl.edu/view/lesson/9beaa382bf7e/8

[8] https://www.bmj.com/about-bmj/resources-readers/publications/statistics-square-one/8-chi-squared-tests