The dependent t-test, also known as the paired samples t-test, is a statistical method used to compare the means of two related groups, allowing researchers to assess whether significant differences exist under different conditions or over time. This test is particularly relevant in educational and psychological research, where it is often employed to analyze the impact of interventions on the same subjects. By measuring participants at two different points—such as before and after a treatment or training program—researchers can identify changes in outcomes, thus making it a valuable tool for evaluating the effectiveness of educational strategies and interventions in various contexts, including first-year university courses.

Notably, the dependent t-test is underpinned by several key assumptions, including the requirement that the data be continuous, the observations be paired, and the differences between pairs be approximately normally distributed. Understanding these assumptions is critical, as violations can lead to inaccurate conclusions and undermine the test’s validity.

Common applications of the dependent t-test include pre-test/post-test studies and matched sample designs, where participants are assessed on a particular variable before and after an intervention.

Overall, the dependent t-test remains a fundamental statistical tool in academic research, with its ability to reveal insights into the effectiveness of interventions and programs. As such, mastering its application and interpretation is essential for first-year university students engaged in quantitative research methodologies.

Assumptions When conducting a dependent t-test, it is crucial to ensure that certain assumptions are met to validate the results. Understanding these assumptions can help you identify potential issues in your data and provide alternatives if necessary.

Assumption 1: Continuous Dependent Variable The first assumption states that the dependent variable must be measured on a continuous scale, meaning it should be at the interval or ratio level. Examples of appropriate variables include revision time (in hours), intelligence (measured using IQ scores), exam performance (scaled from 0 to 100), and weight (in kilograms).

Assumption 2: Paired Observations The second assumption is that the data should consist of paired observations, which means each participant is measured under two different conditions. This ensures that the data is related, allowing for the analysis of differences within the same subjects.

Assumption 3: No Significant Outliers The third assumption requires that there be no significant outliers in the differences between the paired groups. Outliers are data points that differ markedly from others and can adversely affect the results of the dependent t-test, potentially leading to invalid conclusions.

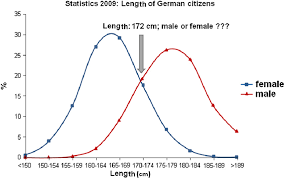

Assumption 4: Normality of Differences The fourth assumption states that the distribution of the differences in the dependent variable should be approximately normally distributed, especially important for smaller sample sizes (N < 25)[5]. While real-world data often deviates from perfect normality, the results of a dependent t-test can still be valid if the distribution is roughly symmetric and bell-shaped.

Common applications of the dependent t-test include pre-test/post-test studies and matched pairs designs. Scenarios for Application Repeated Measures One of the primary contexts for using the dependent t-test is in repeated measures designs. In such studies, the same subjects are measured at two different points in time or under two different conditions. For example, researchers might measure the physical performance of athletes before and after a training program, analyzing whether significant improvements occurred as a result of the intervention.

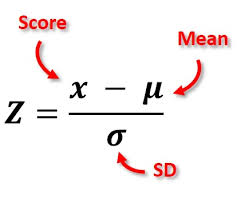

Hypothesis Testing In conducting a dependent t-test, researchers typically formulate two hypotheses: the null hypothesis (H0) posits that there is no difference in the means of the paired groups, while the alternative hypothesis (H1) suggests that a significant difference exists. By comparing the means and calculating the test statistic, researchers can determine whether to reject or fail to reject the null hypothesis, providing insights into the effectiveness of an intervention or treatment.